Solution Overview

Our solution lets you take complex models with many variable inputs, where brute-force or grid-based searches are not feasible, and efficiently determine the optimal model configuration or perform advanced analytics.

Easy model integration

Existing models integrate through a Python wrapper system that requires minimal adjustments, provided the simulation can be parameterized and returns a performance metric.
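A minimal sketch of what such a wrapper could look like, assuming the contract is "a dict of named parameters in, one float metric out". `ModelWrapper`, `FunctionModel` and `legacy_model` are hypothetical names for illustration, not the framework's actual API.

```python
from abc import ABC, abstractmethod
from typing import Callable, Dict

Params = Dict[str, float]


class ModelWrapper(ABC):
    """Hypothetical interface: run one parameterized simulation, return one metric."""

    @abstractmethod
    def run(self, params: Params) -> float:
        """Run the model with the given parameters and return a performance metric."""


class FunctionModel(ModelWrapper):
    """Adapts an existing plain-Python model function to the wrapper interface."""

    def __init__(self, model_fn: Callable[..., float]) -> None:
        self.model_fn = model_fn

    def run(self, params: Params) -> float:
        # Pass the parameters as keyword arguments to the existing model
        return float(self.model_fn(**params))


# An existing model that was not written with the framework in mind
def legacy_model(x: float, y: float) -> float:
    return -(x - 1.0) ** 2 - (y + 2.0) ** 2


wrapped = FunctionModel(legacy_model)
score = wrapped.run({"x": 3.0, "y": -2.0})
```

The existing model itself is untouched; only the thin adapter knows about the parameter dictionary.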

Distributed microservices architecture

We use a microservices approach with dedicated containers for simulations, optimizations, and data handling. This is built on established technologies (Python, Kubernetes, RabbitMQ, REST APIs), ensuring robustness and scalability.

Flexible framework

Our solution comes with built-in heuristic optimizers such as particle swarm optimization (PSO), genetic algorithms (GA), and simulated annealing (SA). Containers for bespoke workloads like Monte Carlo or attribution analysis can easily be added to the framework due to the object-oriented interface.
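As an illustration of one of the heuristics named above, here is a minimal simulated annealing sketch (Metropolis acceptance with geometric cooling). It is not the framework's built-in implementation; the step size, temperatures and iteration count are arbitrary choices for the example, and it minimizes a cost, so a metric to be maximized would be negated first.

```python
import math
import random
from typing import Callable, List


def simulated_annealing(
    objective: Callable[[List[float]], float],
    start: List[float],
    step: float = 0.5,
    t_start: float = 1.0,
    t_end: float = 1e-3,
    iterations: int = 5000,
    seed: int = 0,
) -> List[float]:
    """Minimize `objective` with simulated annealing and a geometric cooling schedule."""
    rng = random.Random(seed)
    current = list(start)
    current_cost = objective(current)
    best, best_cost = list(current), current_cost
    cooling = (t_end / t_start) ** (1.0 / iterations)  # per-step temperature factor
    temperature = t_start
    for _ in range(iterations):
        # Propose a random neighbour by perturbing every coordinate
        candidate = [v + rng.uniform(-step, step) for v in current]
        cost = objective(candidate)
        delta = cost - current_cost
        # Always accept improvements; accept worse moves with probability exp(-delta / T)
        if delta <= 0 or rng.random() < math.exp(-delta / temperature):
            current, current_cost = candidate, cost
            if cost < best_cost:
                best, best_cost = list(candidate), cost
        temperature *= cooling
    return best


result = simulated_annealing(lambda p: (p[0] - 1.0) ** 2 + (p[1] + 2.0) ** 2, [5.0, 5.0])
```

Early on, the high temperature lets the search escape local minima; as it cools, the search becomes effectively greedy.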

The Orchestrator

The orchestrator coordinates high-level workflows, monitors task statuses, and returns results or progress updates to the calling client.

The orchestrator can be used directly via HTTP requests or wired up to a suitable front-end application.
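A sketch of a client submitting an optimization job over HTTP using only the standard library. The service address `http://orchestrator:8080`, the `/optimizations` endpoint and the payload fields are hypothetical placeholders; the real API may differ.

```python
import json
import urllib.request


def build_optimization_request(base_url: str, payload: dict) -> urllib.request.Request:
    """Build (but do not send) a POST request that submits an optimization job."""
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/optimizations",  # hypothetical endpoint
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_optimization_request(
    "http://orchestrator:8080",  # hypothetical service address
    {
        "optimizer": "pso",
        "parameters": {"x": [-5.0, 5.0], "y": [-5.0, 5.0]},  # search bounds per parameter
        "max_iterations": 50,
    },
)
# To submit it: urllib.request.urlopen(req) sends the request and returns the response.
```

A front-end application would issue the same kind of request on the user's behalf.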

Message-driven Coordination

Requests for simulations and optimizations are published to queues (we use RabbitMQ), and results are returned via a queue as well. Multiple simulator or optimizer containers consume from these queues concurrently, which allows parallel, distributed processing and easy scaling.

This design decouples request initiation from request handling, enabling the system to gracefully manage spikes in workload.
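The "competing consumers" pattern described above can be sketched in-process, with Python's `queue.Queue` and threads standing in for RabbitMQ queues and simulator containers. This is only an analogy: in deployment each worker would be a separate container consuming from a broker queue. `simulate`, `simulator_worker` and `run_batch` are hypothetical names.

```python
import queue
import threading
from typing import Dict, List


def simulate(params: Dict[str, float]) -> float:
    """Stand-in for a wrapped model: returns a performance metric."""
    return -(params["x"] - 1.0) ** 2


def simulator_worker(requests: queue.Queue, results: queue.Queue) -> None:
    """Consume simulation requests until a None sentinel arrives."""
    while True:
        item = requests.get()
        if item is None:  # sentinel: shut this worker down
            break
        task_id, params = item
        results.put((task_id, simulate(params)))


def run_batch(param_sets: List[Dict[str, float]], n_workers: int = 4) -> Dict[int, float]:
    requests: queue.Queue = queue.Queue()
    results: queue.Queue = queue.Queue()
    workers = [
        threading.Thread(target=simulator_worker, args=(requests, results))
        for _ in range(n_workers)
    ]
    for w in workers:
        w.start()
    # Publishing is decoupled from handling: the producer just enqueues and moves on
    for task_id, params in enumerate(param_sets):
        requests.put((task_id, params))
    for _ in workers:
        requests.put(None)  # one sentinel per worker
    for w in workers:
        w.join()
    # Results may arrive out of order, so key them by task id
    out: Dict[int, float] = {}
    while not results.empty():
        task_id, score = results.get()
        out[task_id] = score
    return out


scores = run_batch([{"x": float(i)} for i in range(10)])
```

Adding throughput is a matter of raising `n_workers`, just as the real system adds consumer containers.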

Simulator Containers

Each simulator container holds the logic or model needed to run a parameterized simulation. (In a bring-your-own-model scenario, the simulator simply wraps the provided model.)

Because each simulator runs independently and concurrently, horizontal scaling is achieved by simply adding more simulator containers to handle high volumes of simulation tasks. (If necessary, such scaling can be automated.)
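One way to automate this scaling is a Kubernetes HorizontalPodAutoscaler, whose documented core rule is desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue). The sketch applies that rule to a hypothetical metric (queued simulation tasks per simulator replica) and clamps the result to assumed minimum and maximum replica counts. The real HPA also applies a tolerance and stabilization windows, which are omitted here.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 20,
) -> int:
    """HPA-style rule: ceil(current_replicas * current_metric / target_metric), clamped."""
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


# Hypothetical: 4 simulator replicas, 150 queued tasks per replica, target of 50 per replica
replicas = desired_replicas(4, 150, 50)  # 4 * 150 / 50 = 12
```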

Optimizer containers

The optimizer containers implement the optimization algorithms (e.g., PSO, GA, or SA). These algorithms typically follow an iterative approach:

- An initial “population” of candidate parameter sets is generated. Each parameter set is then dispatched to the simulator containers for evaluation.

- When simulators return performance metrics, the optimizer updates the overall “fitness” scores for each candidate.

- Based on these scores, the optimizer generates a new, refined population of parameters, which it again sends out for simulation.

- This loop continues until a convergence criterion is met (e.g., a defined number of iterations or a target performance threshold).
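The loop above can be sketched with particle swarm optimization. Here `evaluate_batch` is a hypothetical stand-in for publishing one simulation request per candidate and collecting the returned metrics; the coefficients are commonly cited PSO defaults, not the framework's settings, and a fixed iteration budget serves as the convergence criterion.

```python
import random
from typing import Callable, List, Tuple

Vector = List[float]


def pso(
    evaluate: Callable[[List[Vector]], List[float]],
    bounds: List[Tuple[float, float]],
    n_particles: int = 20,
    iterations: int = 100,
    w: float = 0.729,   # inertia weight
    c1: float = 1.494,  # pull towards each particle's own best
    c2: float = 1.494,  # pull towards the swarm's best
    seed: int = 0,
) -> Tuple[Vector, float]:
    """Maximize a metric; `evaluate` scores a whole population in one batch."""
    rng = random.Random(seed)
    dim = len(bounds)
    # Initial population of candidate parameter sets, dispatched for evaluation
    pos = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    scores = evaluate(pos)
    pbest = [p[:] for p in pos]
    pbest_score = list(scores)
    g = max(range(n_particles), key=lambda i: pbest_score[i])
    gbest, gbest_score = pbest[g][:], pbest_score[g]

    for _ in range(iterations):  # fixed iteration budget as the stopping criterion
        # Generate a refined population from personal and global bests
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])
                             + c2 * r2 * (gbest[d] - pos[i][d]))
                lo, hi = bounds[d]
                pos[i][d] = min(hi, max(lo, pos[i][d] + vel[i][d]))  # stay within bounds
        # Send the new population out for simulation and update fitness records
        scores = evaluate(pos)
        for i, s in enumerate(scores):
            if s > pbest_score[i]:
                pbest[i], pbest_score[i] = pos[i][:], s
                if s > gbest_score:
                    gbest, gbest_score = pos[i][:], s
    return gbest, gbest_score


def evaluate_batch(population: List[Vector]) -> List[float]:
    # Stand-in for dispatching simulation requests and collecting their metrics
    return [-(x - 1.0) ** 2 - (y + 2.0) ** 2 for x, y in population]


best, best_score = pso(evaluate_batch, bounds=[(-5.0, 5.0), (-5.0, 5.0)])
```

Because each iteration evaluates the whole population at once, every candidate in a batch can be simulated in parallel.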

Data I/O containers

Separate containers are used to interface with storage systems (data lakes, databases, etc.), ensuring that simulators and optimizers remain focused on computations and do not require direct database connections.

Storing and retrieving large volumes of simulation data can be handled asynchronously, which keeps the system responsive. This decoupled design also permits data caching for better performance.
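A minimal `asyncio` sketch of a data I/O service with background writes and a read-through cache. An in-memory dict stands in for the external store, `asyncio.sleep` simulates storage latency, and `DataService` with its methods is a hypothetical name, not the framework's actual service.

```python
import asyncio
from typing import Dict, Tuple


class DataService:
    """Hypothetical data I/O service with background writes and cached reads."""

    def __init__(self) -> None:
        self._store: Dict[str, dict] = {}  # stands in for a data lake or database
        self._cache: Dict[str, dict] = {}
        self._pending: asyncio.Queue = asyncio.Queue()
        self.backend_reads = 0  # how often the (slow) store was actually hit

    async def write(self, key: str, record: dict) -> None:
        # Callers only enqueue the record; persistence happens in writer_loop
        await self._pending.put((key, record))

    async def writer_loop(self) -> None:
        while True:
            key, record = await self._pending.get()
            await asyncio.sleep(0.01)  # simulated storage latency
            self._store[key] = record
            self._cache.pop(key, None)  # invalidate any stale cached copy
            self._pending.task_done()

    async def flush(self) -> None:
        await self._pending.join()  # wait until every queued write is persisted

    async def read(self, key: str) -> dict:
        if key in self._cache:
            return self._cache[key]
        self.backend_reads += 1
        await asyncio.sleep(0.01)  # simulated storage latency
        record = self._store[key]
        self._cache[key] = record
        return record


async def main() -> Tuple[dict, dict, int]:
    service = DataService()  # created inside the running event loop
    writer = asyncio.create_task(service.writer_loop())
    await service.write("run-1", {"score": -4.0})  # returns without waiting for storage
    await service.flush()
    first = await service.read("run-1")   # fetched from the store
    second = await service.read("run-1")  # served from the cache
    writer.cancel()
    await asyncio.gather(writer, return_exceptions=True)
    return first, second, service.backend_reads


first, second, backend_reads = asyncio.run(main())
```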

Additional containers

Additional containers for bespoke workloads can easily be added to the framework.

For example, an “analyzer” container could perform Monte Carlo, sensitivity, or perturbation analyses of particular simulation scenarios.
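A sketch of the core of such a hypothetical analyzer: a Monte Carlo perturbation analysis that adds Gaussian noise to selected parameters of a scenario and summarizes how the performance metric varies. `monte_carlo` and `linear_model` are illustrative names, and the Gaussian noise model is an assumption.

```python
import random
import statistics
from typing import Callable, Dict, Tuple


def monte_carlo(
    model: Callable[[Dict[str, float]], float],
    base_params: Dict[str, float],
    noise: Dict[str, float],
    n_samples: int = 2000,
    seed: int = 0,
) -> Tuple[float, float]:
    """Perturb the parameters named in `noise` with Gaussian noise (std = given sigma).

    Returns the mean and standard deviation of the resulting metric.
    """
    rng = random.Random(seed)
    samples = []
    for _ in range(n_samples):
        params = dict(base_params)
        for name, sigma in noise.items():
            params[name] = rng.gauss(base_params[name], sigma)
        samples.append(model(params))
    return statistics.mean(samples), statistics.stdev(samples)


def linear_model(p: Dict[str, float]) -> float:
    # Stand-in model: metric = 2x + y
    return 2.0 * p["x"] + p["y"]


mean, std = monte_carlo(linear_model, {"x": 1.0, "y": 3.0}, noise={"x": 0.1})
```

For this linear stand-in the expected result is known analytically (mean 5, standard deviation 2 * 0.1 = 0.2), which makes it a convenient sanity check; with a real model, each sample would be a simulation request.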

Scalability and deployment

Each functional service (orchestrator, simulator, optimizer, data handler, etc.) runs in its own container for deployment on a Kubernetes (k8s) cluster. This separation ensures that each component can be independently scaled, updated, and maintained, improving overall robustness and flexibility.

We provide configuration for a k0s-based k8s cluster, and the entire framework is packaged into Helm charts for straightforward deployment and lifecycle management.

The design is cloud-agnostic, enabling easy deployment to AWS, Azure, VPS providers such as Hetzner, or on-premises clusters.

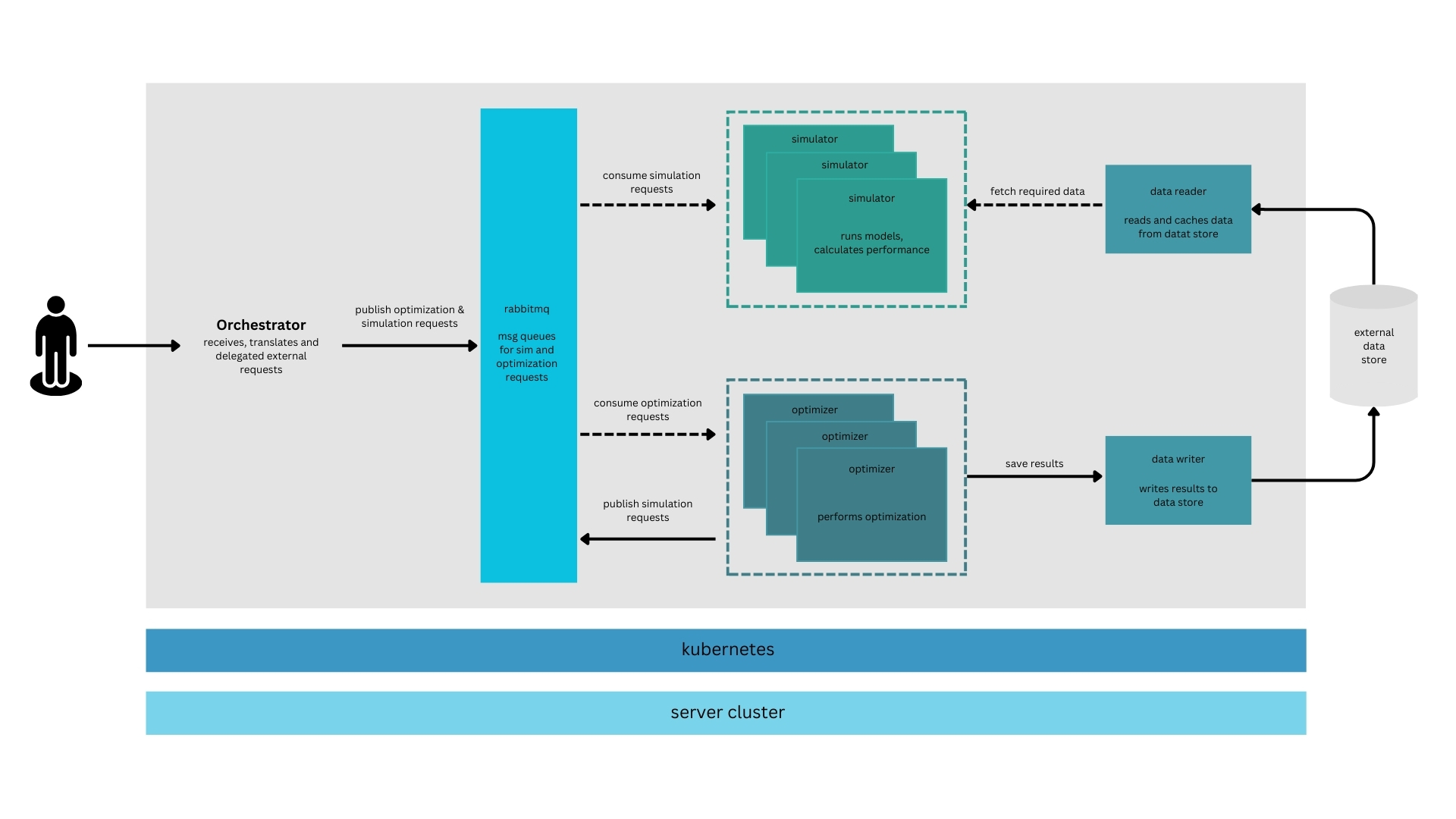

HIGH LEVEL ARCHITECTURE & PROCESS FLOW DIAGRAM

External requests (e.g., for a new optimization) are made via the orchestrator’s REST API. (An operator UI could be used as a frontend, which would then dispatch requests to the API.)

The orchestrator publishes the simulation and optimization requests to the RabbitMQ queues.

The optimizer services retrieve optimization requests from a queue and publish simulation requests to a separate queue as part of the optimization process. (There is typically an iterative process of publishing simulation requests, collecting the results, and publishing new requests.)

The simulator services consume simulation requests (which contain the parameters of the simulation), run them using the embedded model(s), and push the results (performance scores) back to a queue for collection by the optimizer.
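A sketch of possible message shapes for this exchange, serialized as JSON bytes as a queue message body would be. Because results can return out of order from many simulators, each result echoes a request ID so the optimizer can match it to its candidate. The field names, the `handle` function and the ID `"opt-42"` are hypothetical.

```python
import json
import uuid
from dataclasses import asdict, dataclass, field
from typing import Callable, Dict


@dataclass
class SimulationRequest:
    params: Dict[str, float]
    optimization_id: str  # which optimization run this request belongs to
    request_id: str = field(default_factory=lambda: uuid.uuid4().hex)

    def to_json(self) -> bytes:
        return json.dumps(asdict(self)).encode("utf-8")


@dataclass
class SimulationResult:
    request_id: str  # echoed from the request so the optimizer can match replies
    optimization_id: str
    score: float

    @classmethod
    def from_json(cls, body: bytes) -> "SimulationResult":
        return cls(**json.loads(body))


def handle(body: bytes, model: Callable[[Dict[str, float]], float]) -> bytes:
    """Simulator side: decode a request, run the model, encode the result."""
    req = json.loads(body)
    score = float(model(req["params"]))
    result = SimulationResult(req["request_id"], req["optimization_id"], score)
    return json.dumps(asdict(result)).encode("utf-8")


request = SimulationRequest(params={"x": 3.0}, optimization_id="opt-42")
reply = handle(request.to_json(), lambda p: -(p["x"] - 1.0) ** 2)
result = SimulationResult.from_json(reply)
```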

The data reader and data writer services interact with external storage for logging, retrieval and/or caching of datasets, and persistence of results.

The entire setup runs on a distributed Kubernetes cluster.